Turn AI Skeptics Into Power Users

Pilot programs don't change habits. Developer-to-developer sessions do. We've rebuilt our entire workflow around AI tools. Let us show your team what daily adoption actually looks like.

Your developers know AI coding assistants exist. Some tried GitHub Copilot for a week. A few experimented with Cursor. But knowing the tools exist and actually using them every day are two different things.

The obstacle isn't skepticism about whether the tools work. It's the friction of changing established habits when there's real work to deliver. Your internal advocacy helps, but there's only so far "leadership says we should use this" can go.

What moves the needle is hearing from developers who've already made the shift. Practitioners who can speak to the real workflow changes, answer the skeptical questions, and show (not tell) what fluency looks like in practice.

That's what we do.

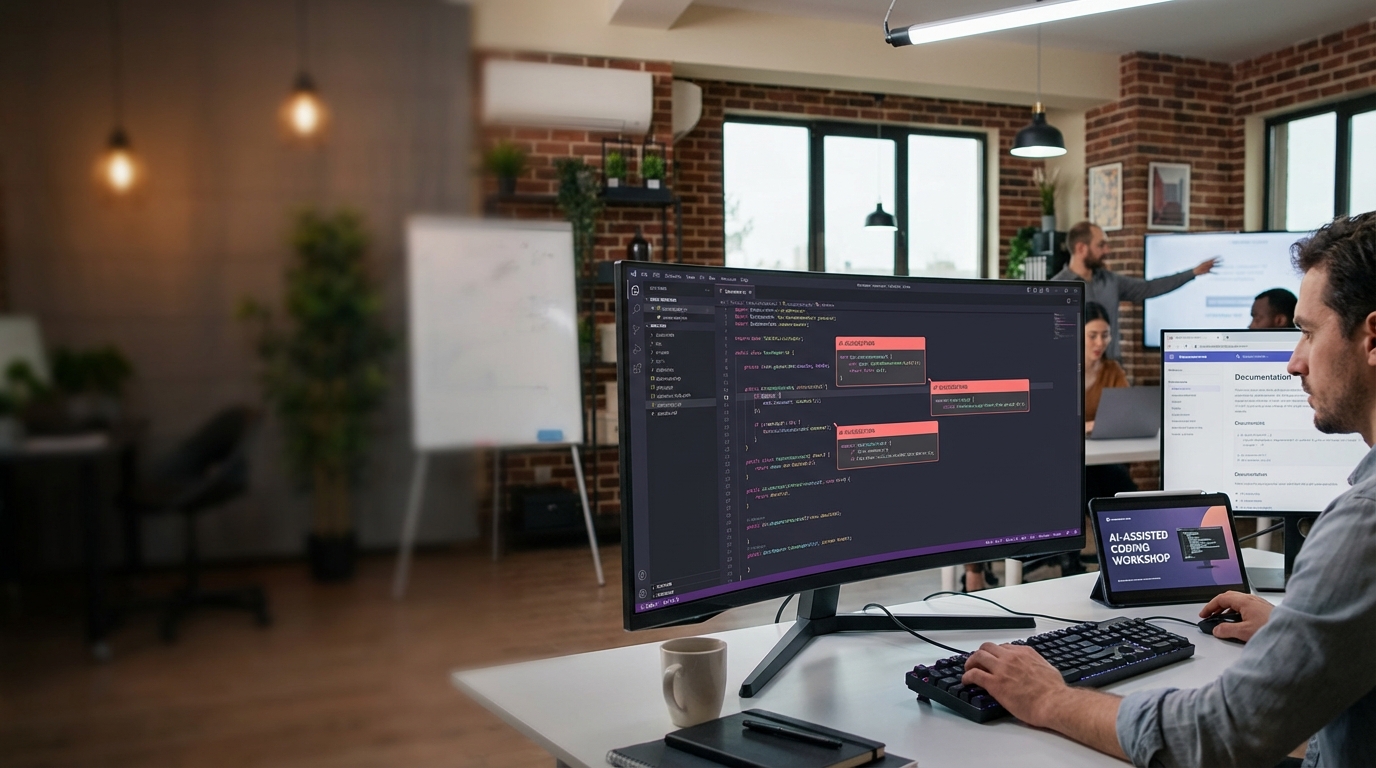

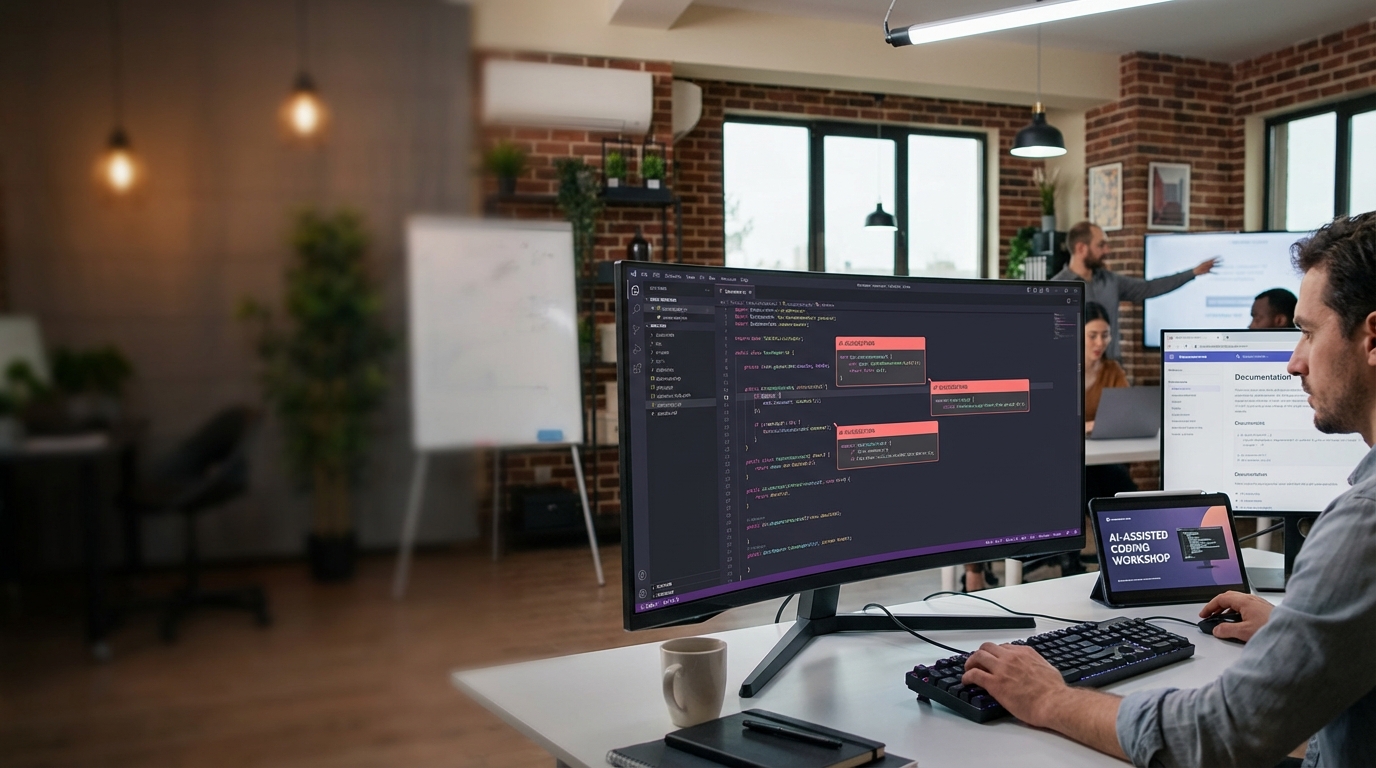

Not a demo. A working session.

We don't show polished demos with cherry-picked examples. We show how we actually use these tools: the prompting patterns that work, the ones that don't, and how to recover when the AI gives you garbage.

Abstract examples don't land. We work in your languages, your IDEs, your codebase patterns. If your team writes Python microservices, that's what we code. If they're in React Native, we're in React Native.

The engineers who haven't adopted yet usually have specific objections. "It slows me down." "The suggestions are wrong half the time." "I don't trust it with our security-sensitive code." We've heard them all, and we address them directly.

Watching someone else code isn't the same as doing it yourself. Sessions include structured exercises where your team uses the tools on real problems with us in the room to troubleshoot.

Tailored to your team

Best for teams that have some exposure but haven't built consistent habits. Covers core workflows, live demos, and guided practice.

Deep dive for teams starting fresh or needing comprehensive coverage across multiple tool categories (code generation, chat interfaces, agentic workflows). Includes extended hands-on practice.

Full-day session plus scheduled follow-up sessions over 2-4 weeks. Lets your team ask questions as they encounter real-world edge cases.

Before we scope a session, we'll ask:

How many developers? What's the mix of seniority levels?

Languages, IDEs, frameworks. We tailor examples to what your team actually uses.

Have you piloted Copilot, Cursor, or other tools? What worked? What didn't?

What's keeping adoption from happening organically? Security concerns? Workflow friction? Skepticism?

We're not AI consultants. We're developers who use these tools daily.

We've integrated AI into our actual client delivery: not as an experiment, but as how we work. Discovery engagements that used to produce wireframes now produce working prototypes. Codebases that used to take weeks to understand take days.

We've made the mistakes. We know which prompting patterns waste time. We know when to trust the suggestions and when to throw them out. We're not selling tools; we're sharing what actually works.

Tell us about your team, your tools, and what's blocking adoption. We'll put together a proposal that fits.

Get a Fluency Session Estimate

Common questions about AI Developer Fluency sessions.

They solve different problems. Copilot excels at inline suggestions while you type. Cursor is stronger for chat-based interactions and larger context windows. We can cover both, or focus on whichever you've already invested in.

Sessions can use sanitized examples or work entirely within your environment under NDA. We're not there to see your code; we're there to teach your team.

Yes. We've run effective remote sessions via screen share and collaborative coding tools. In-person is better for larger groups, but remote works.

Depends on format and team size. Reach out and we'll put together a scoped estimate.
